AI Policy

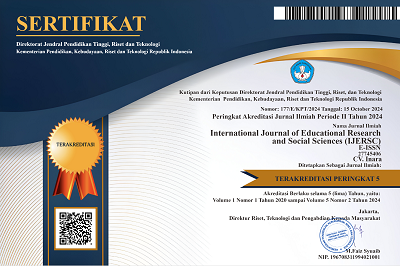

International Journal of Educational Research in Social Culture (IJERSC) adopts this AI Policy, effective immediately, to ensure the ethical use of generative AI tools while maintaining research integrity. The policy applies to all submissions at https://ijersc.org/index.php/go/index and is modeled on the policies of established Indonesian and international journals.

Introduction and Scope

This policy governs the use of AI by authors, reviewers, and editors during manuscript preparation, peer review, and publication. Generative AI refers to tools such as ChatGPT, Gemini, or Claude that produce text, images, data, or code.

Author Responsibilities

Authors may use AI tools for grammar correction, summarization, or data visualization, but they must verify all outputs for accuracy. AI tools cannot be listed as authors, and AI-generated content must not exceed 10% of the manuscript.

- Include an "AI Declaration Statement" in the Methods or Acknowledgments section, or in a dedicated section: "The authors used [Tool Name, version] for [purpose, e.g., language polishing]. The full content was reviewed and approved by the authors."

- Do not use AI for data fabrication, falsified references, or generating the core novelty of the work.

Reviewer and Editor Guidelines

Reviewers must not share confidential manuscripts with AI tools and may use AI only for minor tasks such as grammar checks, never for evaluative judgments. Editors may use AI solely for plagiarism detection and formatting, and they retain human oversight of all editorial decisions.

Compliance and Sanctions

Non-disclosure or misuse of AI may result in manuscript rejection, retraction, or notification of the author's institution. This policy is reviewed annually in line with COPE guidance. Please contact the IJERSC editors with any questions.